
“What we have before us are some breathtaking opportunities disguised as insoluble problems.” — John Gardner, 1965

“Machine learning (ML), and in particular deep learning, is an attractive alternative for architects to explore. It has recently revolutionized vision, speech, language understanding, and many other fields, and it promises to help with the grand challenges facing our society. The computation at its core is low-precision linear algebra. Thus, ML is both broad enough to apply to many domains and narrow enough to benefit from domain-specific architectures. Moreover, the growth in demand for ML computing exceeds Moore’s law at its peak, just as it is fading. Hence, ML experts and computer architects must work together to design the computing systems required to deliver on the potential of ML.” – Jeff Dean, David Patterson, and Cliff Young.

“Inherent inefficiencies in general-purpose processors, whether from ILP techniques or multicore, combined with the end of Dennard scaling and Moore’s Law, make it highly unlikely, in our view, that processor architects and designers can sustain significant rates of performance improvements in general-purpose processors. Given the importance of improving performance to enable new software capabilities, we must ask: What other approaches might be promising? A clear opportunity is to design architectures tailored to a specific problem domain and offer significant performance (and efficiency) gains for that domain.” – John Hennessy and David Patterson, Turing Lecture, “A New Golden Age for Computer Architecture.”

Overview

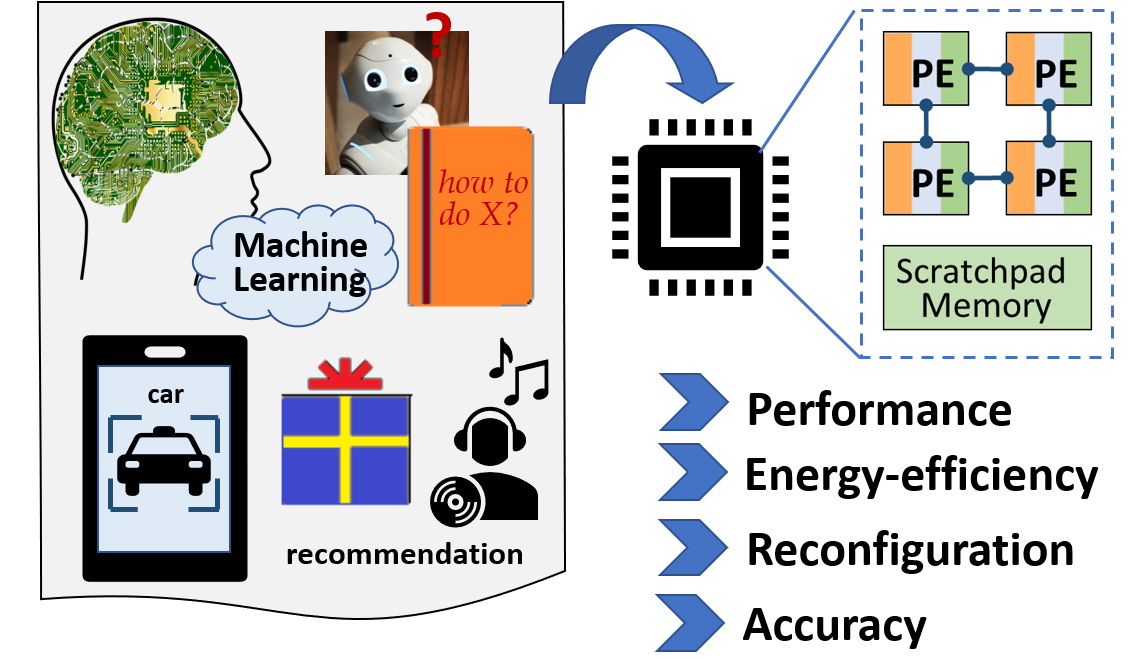

The remarkable success of machine learning (ML) algorithms has led to the deployment of industrial ML accelerators across cloud, mobile, edge, and wearable platforms, for applications ranging from computer vision and speech processing to recommendation and graph learning. This course is intended to give students a solid understanding of such accelerator system designs, including the impact of various hardware and software components on costs such as latency, energy, area, throughput, power, storage, and inference accuracy for ML tasks. The course will begin with accelerator architectures for computer vision (convolutional and feed-forward neural networks) and culminate in cutting-edge advances such as accelerator systems for federated, on-device, and graph learning, along with industrial case studies. The course will also cover other important topics in ML accelerator system design, such as execution cost modeling, mapping and hardware design space exploration, compilers and ISAs for ML accelerators, multi-chip/multi-workload designs, accelerator-aware neural architecture search, and the reliability and security of ML accelerators.

Learning Outcomes

This course will help students gain a solid understanding of the fundamentals of designing machine learning accelerators and of relevant cutting-edge topics. After completing this course, students will be able to understand:

- The role and importance of machine learning accelerators.

- Implementation of machine learning hardware, including various computational, NoC (network-on-chip) and memory configurations.

- Libraries and frameworks for designing and exploring machine learning accelerators.

- The efficiency challenges posed by large and deep models, and the resulting need for tensor compression.

- The architectural implications of compression techniques and their impact on inference accuracy.

- Characterization of accelerators under various deployment scenarios (cloud vs. edge).

- How accelerators for training machine learning models work.

- How accelerators differ across domains such as computer vision, language processing, graph learning, and recommendation systems.

- The need for reconfigurable architectural components to support various workloads and a range of specializations, and case studies of designs that enable it.

- The programming challenges posed by machine learning accelerators, especially those arising from algorithmic and hardware specializations such as sparsity and mixed-precision computation.

- The need for accelerator-aware neural architecture search.

- Various runtime optimizations for efficient inference on accelerators.

- Recent accelerator systems developed by industry and their trade-offs.

Prerequisites

A background in Computer Architecture (CSE 420 or CSE 520) and Machine Learning (e.g., courses like CSE 571, CSE 574, CSE 575, CSE 576, EEE 598/591: Machine/Deep Learning) will be advantageous.

Office Hours and Location

TBD

Assessment

Students will be evaluated on their participation in class discussions and paper presentations, the quality of their paper reviews, and a topical survey paper.

Grade Components:

- Class participation – 20%

- Paper critiques and presentations – 30%

- Topical survey paper – 50%

Engaging actively in class discussions and reviewing the assigned readings carefully and on time will help you generate good ideas and get the most out of the course.

Class Participation

In each class, preselected students will present the assigned paper. Students will then discuss the paper in small groups, followed by a full-class discussion and a short lecture introducing the topic of the next class.

Paper Review

Each week, 2–3 papers will be assigned for review. For each paper, every student will write a review that summarizes the paper, discusses its strengths and weaknesses, and points out potential future research directions.

Late Submission Policy

Start early and plan your time well. To account for unavoidable issues, each student gets three 1-day extensions for paper review submissions that can be used without asking the course instructor. Use them wisely.

Topical Survey Paper

Students will work in groups of 1–3 on a survey of an ML-accelerator-related topic. Each group will prepare a survey paper and slides to present their work.

Tentative Schedule

- Week 1 topic: Overview of Machine Learning and Deep Learning Models

- Week 2 topic: Introduction to DNN accelerators (CNNs, GEMMs/MLPs) and their mappings (dataflow orchestration). NoCs and memory designs for DNN accelerators.

- Week 3 topic: Accelerators for NLP (RNNs, LSTMs, and Transformers) and Neuromorphic Architectures

- Week 4 topic: Design Libraries, Programming Languages, and Compilers for Generating Accelerators, Execution Cost Modeling, Mapping Optimizations, and Hardware/Software Design Space Exploration

- Week 5 topic: Compilers for ML Accelerators, Machine Code Generation; Automated Cost Model Generation with Machine/Deep Learning

- Week 6 topic: Accelerators for Training; Quantization (precision, value similarity)

- Week 7 topic: Sparse ML Accelerators (unstructured sparsity, structured sparsity and model/accelerator codesign)

- Week 8 topic: Accelerator-aware Neural-architecture Search and Accelerator/Model Codesigns

- Week 9 topic: Accelerator Design for Multiple Workloads; Multi-chip Accelerator Designs

- Week 10 topic: ML Accelerators for Near-data Processing and In-memory Computation; Emerging Technologies such as Photonics

- Week 11 topic: Accelerators for Recommendation Systems and Graph Learning

- Week 12 topic: Full-Stack System Infrastructures and Workload Characterizations for Various Deployment Scenarios

- Week 13 topic: Federated and On-device Learning on ML Accelerators; Runtime Optimizations

- Week 14 topic: Industry Case Studies (Established Startups)

- Week 15 topic: More Industry Case Studies

- Week 16 topic: Reliability and Security of ML Accelerators

Each week, about 2–3 papers on the week’s topic will be discussed in class. These papers are listed by topic in the reading list. The reading list also contains additional papers for each topic, as well as papers on related topics that may not be covered in class due to limited time. This comprehensive reading list serves as a reference for both required and optional readings, for in-class discussion as well as for writing the topical survey. Within each topic, the required papers typically appear at the top of the list, and additional/optional references are indicated by a note.

Suggested Topics for Survey Writing

- Accelerators for DNN Training

- Accelerators for Recommendation Systems

- Accelerators for Graph Learning

- Accelerators for GANs

- Accelerators for Transformer-based Models

- Multi-DNN Accelerator Design

- Multi-chip DNN Accelerators

- Cost Models of DNN Accelerators

- Libraries and Frameworks for Designing DNN Accelerators

- Mapping Optimizations for DNN Accelerators

- Design Space Exploration of DNN Accelerators, including through Machine/Deep Learning

- Accelerators for On-Device and Federated Learning

- Compilers for ML Accelerators

- ISAs and Machine Code Generation for ML Accelerators

- Automated Cost Model Generation with Machine/Deep Learning

- Runtime Optimizations for DNN Accelerators

- Emerging Technologies for DNN Accelerators

- Photonic DNN Accelerators

- Security of DNN Accelerators

- Reliability of DNN Accelerators

- Industry DNN Accelerators

If you want to write a survey on a topic that is not listed above, please contact the course instructor with a description of the topic and an explanation of why you think it is a good topic for a new survey.

Suggested writing format:

– At least 8 pages in ACM Computing Surveys format (single-spaced, excluding references) [Format, see “Manuscript Preparation”] OR

– At least 4 pages in IEEE journals format (double-column, excluding references) [Format; For example, select “journal/transactions” and “Transactions on Computer-Aided Design”]

The minimum page counts above exclude references; there is no limit on the length of the reference list.

A general guide to possible survey sections:

– Abstract and Introduction (Overview of whole survey and contributions)

– Problem/Needs (e.g., why accelerator for topic X?)

– Categorization/Taxonomy or Overview of Solution (e.g., in terms of design aspects)

– Description of each design/solution aspect as per categorization; listing of alternative methods for each aspect; qualitative/quantitative trade-offs of alternative methods for each aspect

– Example case studies

– Open challenges and Future directions

– Conclusions

Students may refer to the reference surveys listed below for further insight.

Reference Books

- Efficient Processing of Deep Neural Networks (Synthesis Lectures on Computer Architecture). Authors: Vivienne Sze, Yu-Hsin Chen, Tien-Ju Yang, and Joel S. Emer. Publisher: Morgan and Claypool. ISBN: 978-1-68-173831-4.

- Deep Learning Systems: Algorithms, Compilers, and Processors for Large-Scale Production (Synthesis Lectures on Computer Architecture). Author: Andres Rodriguez. Publisher: Morgan and Claypool. ISBN: 978-1-68-173966-3. EBook: https://deeplearningsystems.ai/

Reference Surveys

- [DNN Models and Architectures] Efficient processing of deep neural networks: A tutorial and survey. Vivienne Sze, Yu-Hsin Chen, Tien-Ju Yang, and Joel S. Emer. Proceedings of the IEEE 105, no. 12 (2017): 2295-2329. [Paper]

- [Model Compression and Exploration Techniques] Model compression and hardware acceleration for neural networks: A comprehensive survey. Lei Deng, Guoqi Li, Song Han, Luping Shi, and Yuan Xie. Proceedings of the IEEE 108, no. 4 (2020): 485-532. [Paper]

- [Accelerators for Compact ML Models] Hardware acceleration of sparse and irregular tensor computations of ML models: A survey and insights. Shail Dave, Riyadh Baghdadi, Tony Nowatzki, Sasikanth Avancha, Aviral Shrivastava, and Baoxin Li. Proceedings of the IEEE 109, no. 10 (2021): 1706-1752. [Paper]

- [Deep Learning Compiler] The deep learning compiler: A comprehensive survey. Mingzhen Li, Yi Liu, Xiaoyan Liu, Qingxiao Sun, Xin You, Hailong Yang, Zhongzhi Luan, Lin Gan, Guangwen Yang, and Depei Qian. IEEE Transactions on Parallel and Distributed Systems 32, no. 3 (2020): 708-727. [Paper]

Plagiarism and Cheating

This is a research-oriented course where collaboration is welcome, but you must give credit where it is due. Plagiarism or any other form of cheating in assignments or projects is subject to serious academic penalties. To understand your responsibilities as a student, read the ASU Student Code of Conduct and the ASU Student Academic Integrity Policy. You may engage in intellectual discussions about reading assignments with your peers or the course TAs/instructor, but all submissions must be your own work, based on your understanding of the content. Your project report should resemble a scholarly academic article, in which you must credit all sources, including your brainstorming partners, published papers, and existing code repositories (e.g., from Stack Overflow or GitHub) that you have used in your implementation. Posting your projects online, including in a public GitHub repository, is expressly forbidden. The GitHub Student Developer Pack provides unlimited private repositories while you are a student.

Title IX

Title IX is a federal law that provides that no person be excluded on the basis of sex from participation in, be denied benefits of, or be subjected to discrimination under any education program or activity. Both Title IX and university policy make clear that sexual violence and harassment based on sex are prohibited. An individual who believes they have been subjected to sexual violence or harassed on the basis of sex can seek support, including counseling and academic support, from the university. If you or someone you know has been harassed on the basis of sex or sexually assaulted, you can find information and resources at https://sexualviolenceprevention.asu.edu/faqs. As mandated reporters, we are obligated to report any information we become aware of regarding alleged acts of sexual discrimination, including sexual violence and dating violence. ASU Counseling Services (https://eoss.asu.edu/counseling) is available if you wish to discuss any concerns confidentially and privately. ASU online students may access 360 Life Services at https://goto.asuonline.asu.edu/success/online-resources.html.