
Introduction

Challenge:

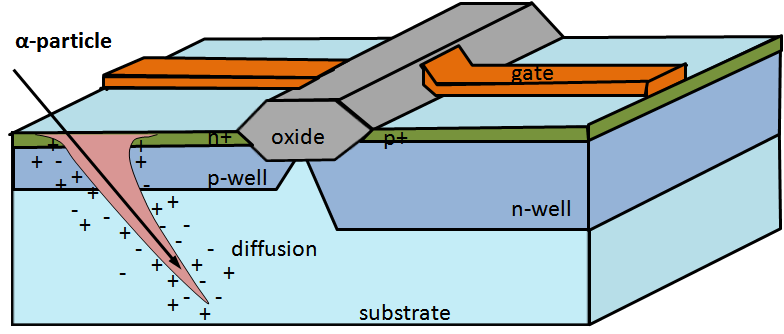

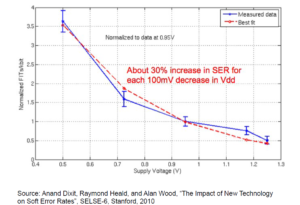

Continuous technology scaling makes it possible to fit more transistors into an integrated circuit. With every new processor generation, transistors shrink in size and operate with a lower threshold voltage and a narrower noise margin than their predecessors. As a result of this aggressive feature-size scaling, devices are becoming increasingly susceptible to soft errors. Soft errors, or transient faults, are typically caused by cosmic particle strikes on transistors and can change the logic value a transistor holds. Our research seeks to quantify the effect of soft errors on system reliability and to develop cost-effective fault-tolerant techniques that mitigate their impact.

A soft error is an erroneous change in the logic value of a transistor. It can be caused by several effects, including fluctuations in signal voltage, noise in the power supply, and inductive coupling, but the majority of soft errors are caused by cosmic particle strikes on the chip. With technology scaling, even low-energy particles can cause soft errors. A soft error can lead to incorrect results, segmentation faults, an application or system crash, or even the system entering an infinite loop. ITRS predicts that transistor sizes will continue to shrink for at least a decade, from 45nm to 12nm and beyond. Even with the introduction of Silicon-on-Insulator (SOI) fabrication technology, this implies a jump of 2 – 3 orders of magnitude in the soft error rate, rising from once per year to once per day!

Quantifying the impact of soft errors on system reliability

Traditionally, researchers apply simulation-based statistical fault injection (SFI) to estimate the effect of soft errors on system reliability. In such techniques, the simulation stops at a random cycle, the value of a randomly selected bit in the simulated microprocessor is inverted, and the simulation then continues executing the program with the faulty value. The impact of the soft error is determined by comparing the outputs of the faulty and fault-free simulation runs. However, performing fault injection experiments is error-prone, computationally expensive, and time-consuming. Vulnerability analysis has been proposed as a promising alternative to fault injection. It examines the effect of soft errors on each bit of the microprocessor at every cycle of execution and, from that, estimates the reliability of a program's execution on the microprocessor.
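The fault injection loop described above can be illustrated with a toy sketch. It is not a microprocessor simulator: the "program" is a hypothetical 32-bit running checksum, its accumulator stands in for the simulated hardware state, and the names `checksum` and `failure_rate` are assumptions made purely for illustration.

```python
import random


def checksum(data, flip_at=None, flip_bit=0):
    """Toy 'program': a 32-bit running sum whose output is its low 8 bits.

    If flip_at is given, one bit of the accumulator is inverted just
    before that iteration, modelling a single transient fault.
    """
    acc = 0
    for cycle, value in enumerate(data):
        if cycle == flip_at:
            acc ^= 1 << flip_bit  # inject the bit flip
        acc = (acc + value) & 0xFFFFFFFF
    return acc & 0xFF  # only the low byte is visible as program output


def failure_rate(data, trials, seed=0):
    """Estimate the fraction of injected faults that corrupt the output."""
    rng = random.Random(seed)
    golden = checksum(data)  # fault-free reference run
    failures = 0
    for _ in range(trials):
        cycle = rng.randrange(len(data))  # random injection cycle
        bit = rng.randrange(32)           # random bit of the accumulator
        if checksum(data, cycle, bit) != golden:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    data = list(range(1, 65))
    print(failure_rate(data, trials=2000, seed=1))
```

In this toy setting, only flips in the low 8 of the 32 accumulator bits can reach the output, so the estimated rate should settle near 0.25. The remaining faults are masked, which is the kind of effect a fault injection campaign is meant to expose, at the cost of many repeated runs.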

One of the main focuses of our reliability research group is to develop an accurate and publicly available vulnerability estimation tool. The tool is based on gem5, a popular cycle-accurate system simulator, and is called gemV (gem5 + Vulnerability). The current version of the tool is available here.
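To give a feel for what a vulnerability estimate measures, here is a minimal sketch for a single storage element. It is not gemV's implementation. It assumes a simplified model in which a stored value is vulnerable during any interval that ends in a read, and not vulnerable during an interval that ends in an overwrite or after its last access. The trace format and function names are hypothetical.

```python
def vulnerable_cycles(trace):
    """Count cycles during which a bit flip could later be consumed.

    trace: list of (cycle, kind) tuples sorted by cycle, where kind is
    'W' (write) or 'R' (read). Assumed to start with a write.
    """
    vulnerable = 0
    last_access = None
    for cycle, kind in trace:
        # An interval ending in a read is vulnerable: a flipped value
        # would be consumed. An interval ending in a write is not,
        # because the corrupted value is overwritten before use.
        if kind == "R" and last_access is not None:
            vulnerable += cycle - last_access
        last_access = cycle
    return vulnerable


def vulnerability(trace, total_cycles):
    """Fraction of the execution during which the element is vulnerable."""
    return vulnerable_cycles(trace) / total_cycles


if __name__ == "__main__":
    # Written at cycle 0, read at 10 and 15, overwritten at 40, read at 45.
    trace = [(0, "W"), (10, "R"), (15, "R"), (40, "W"), (45, "R")]
    print(vulnerability(trace, total_cycles=100))
```

Unlike fault injection, this kind of estimate comes from a single pass over one execution trace, which is where the efficiency advantage comes from.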

Compiler for Soft Errors

Due to the fast-growing soft error rate and the ever-increasing use of digital systems in daily life, reliability has become a must rather than an option for modern microprocessors. However, classic redundancy-based hardware fault-tolerance techniques are undesirable because of their significant design and hardware cost. Compiler-based techniques, on the other hand, have been proposed as a flexible, cost-effective, and efficient way of coping with soft errors.

A compiler can change the susceptibility of a program to soft errors in numerous ways. For instance, the register allocator can spill registers with long lifetimes into ECC-protected memory. A compiler can also provide different error detection and recovery schemes through instruction redundancy and checkpointing.
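The idea behind instruction redundancy with recovery can be sketched at source level. The following is an illustration under stated assumptions, not the output of any particular compiler: every computation is duplicated on a shadow variable, the two copies are compared before the result is used, and a detected mismatch triggers re-execution from the unmodified inputs, which act as the checkpoint. The `fault` parameter and all names are hypothetical and exist only to simulate a bit flip.

```python
class SoftErrorDetected(Exception):
    """Raised when the original and duplicated computations disagree."""


def dot_product_protected(xs, ys, fault=None):
    """Dot product with a duplicated instruction stream.

    fault: optional (iteration, bit) pair; flips that bit in the
    original accumulator only, to simulate a transient fault.
    """
    acc = 0       # original computation
    acc_dup = 0   # redundant copy kept in a shadow variable
    for i, (x, y) in enumerate(zip(xs, ys)):
        acc += x * y
        acc_dup += x * y
        if fault is not None and fault[0] == i:
            acc ^= 1 << fault[1]  # simulated soft error
    # Compare the two copies before the result leaves the function.
    if acc != acc_dup:
        raise SoftErrorDetected("redundant copies diverged")
    return acc


def run_with_recovery(xs, ys, fault=None):
    """Re-execute from the inputs if an error is detected."""
    try:
        return dot_product_protected(xs, ys, fault)
    except SoftErrorDetected:
        # A transient fault is assumed not to recur on the retry.
        return dot_product_protected(xs, ys)


if __name__ == "__main__":
    print(run_with_recovery([1, 2, 3], [4, 5, 6], fault=(1, 3)))
```

The duplication roughly doubles the arithmetic work, which is the usual trade-off of such schemes: detection coverage is bought with extra instructions instead of extra hardware.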